In the last newsletter, I talked about “agreeing on the facts”, separating between “facts, logical inferences, theories, ideas and value judgments”. We looked at facilitating political discussions, academic literature reviews, and fact checking non-fiction books. In this edition, we’ll look at news fact checking organizations, metadata, Wikidata, and some novel ways people using to compile information around the Coronavirus. We’ll conclude with some Roam community links.

During the past few years, the question of fact checking of political speech, and so-called “fake news” has been at the forefront. The actual effect of such “debunking” is debated (I think there is research showing that it’s not very effective in changing minds, at least not in the hyper-partisan environment in the US). But hopefully it’s useful to people who are somewhat rational, and interested in finding the truth, and yet easily fooled by erroneous information.

Michael Caulfield has done incredible work in teaching information literacy to young people for several years, and has often shared on Twitter how the heuristics taught by traditional institutions (like looking for blue checkmarks on Twitter) are not helpful. He wrote a great open book on web literacy, with a clear and tested approach for students to better understand credibility, and recently released a small site on fact-checking the Coronavirus (and also a Twitter account). His core framework centers around four steps:

Stop (think, reflect, question - do not share because you got emotional)

Investigate the Source (who are they really, what’s their expertise)

Find Better Coverage (news search)

Trace Claims, Quotes, and Media to the Original Context (go upstream)

So these are all things that individuals can do to become more critical news consumers, but what about the fact checking organizations (or volunteer networks)?

Continuing the discussion from the first newsletter, I am interested in fact checking discovery and reusability. If institutions or individuals put a lot of time into researching and fact checking specific claims in articles or books, are we beginning to establish a base of verified knowledge, or is it just more grains of sand on the beach?

I visited a well-known fact checking website, PolitiFact, and looked at one of their articles about the Coronavirus. In fact they have something like 20 articles about Coronavirus, all debunking specific articles or Facebook posts.

Opening the actual article, it’s a long narrative with many links, and finally a “verdict”. In the 17 articles above, there must be a very large amount of claims, statements, links to evidence, logical chains etc. It would be interesting to try to present this as some kind of a map - why not start with what we know about Coronavirus, and then various specific debunking articles could link to this article. This would mean that we would have a single place to go for updates, tracking data over time (amount of sick people, government policies etc)?

Metadata to the rescue? schema.org

Not only are these kinds of fact-checks not well organized on a single site, but imagine searching across multiple fact-checking sites to find out whether a claim has been debunked or not. In addition, Google, Facebook and YouTube wanted to automatise that so that they could automatically link to debunking information when someone posts fake news.

This is where the ReviewClaim schema from schema.org comes in. They provide a way for publishers to insert structured machine-readable microdata on their website, which could look like this:

{

"claimReviewed": "Four years after Gov. Andrew Cuomo promised universal pre-K, 79 percent of 4 year olds outside New York City lack full day pre-K.",

"itemReviewed": {

"author": {

"name": "Cynthia Nixon",

"jobTitle": "Candidate for New York Governor",

}

},

"reviewRating": {

"alternateName": "Mostly True",

"ratingValue": "5",

"bestRating": "7",

"worstRating": "1"

}

}As you will notice however, there are many details missing in the snippet above, such as where exactly Cynthia Nixon made this claim? We could use annotations of the source material, and Jon Udell from Hypothes.is has a great post where he describes how Hypothes.is annotations could be used to make much more detailed claim specifications (video).

There is also work underways to expand ClaimReview to add MediaReview, which focuses on video and images, introducing a controlled vocabulary around videos that have been transformed, edited, etc. (See this case study around two stories, one on Biden and one on Obama.) We have been dealing with photo manipulation for decades, both cropping (see the famous example below, courtesy of Mighty Optical Illusions), but with the advent of more sophisticated video editing/manipulation software, and things like deep fakes, things become even more confusing.

Unfortunately there is very little effort at addressing evidence and credibility, other than to trust the “authoritative” (newspaper) sources issuing these statements, and their custom rating systems. Despite this (glaring) shortcoming, let’s see if these metadata standards actually promote reuse/findability.

In use

So where are these annotations being used? I first came across RealClearPolitics.com, which seems to pull in fact check claims from US sources. As a test case, I thought I’d look into claims around China, of which they have a number, but all of them focused on US political figures. The rating systems differ for each platform (four Pinocchios, for example), and the only way to see the evidence is to visit the – often pay-walled – original article. Yet, it’s a beginning. I was almost more excited about the “mostly true” claim than the debunked ones, because I thought this might the beginning of a factual building block. Could we now have a canonical source to link to whenever we claim that China burns 7-8x the amount of coal that US does?

(Ideally this would be a source that got updated when new evidence was added, specified that this claim was valid in the period 2018-2020, but not after 2020, etc).

Then I hit gold - I found the Google Fact Check Explorer. This is much faster, and international. Suddenly we get all kinds of interesting fact checks, like this one:

Seeing all this effort expended on Facebook posts does remind me a bit of the famous XKCD-comic, but I guess it’s in the public interest. Random thought: They should have taken the extra step of updating Wikipedia, but on the other hand, where on Wikipedia do you point out that larger butts do not lead to smarter kids? Under [[Butts]] or [[Infant intelligence]]?

You know that when there is semantically encoded markup, someone will write a bot – and that’s what Reporterslab did, they created a bot that could provide live fact checking during political debates. Apparently they are now redesigning it to put “a human back in the loop”…

I also came across several organizations that aim to combat misinformation on the internet, like the Credibility Coalition, which was founded a few years ago, and doesn’t seem to have done a whole lot, other than producing a useful list of other organizations in the same field, the credcatalog. A related organization/blog is Misinfocom, which just held a conference.

Wikimedia/Wikidata to the rescue

As I was thinking about these fact checks, and reusability, I dreamt about having something like a Wikipedia for facts, where each fact would have a clear unique URL, where volunteers could curate resources and arguments, and more complex arguments could build on simpler chains of argumentation. Wikipedia articles are of course ideally made up of facts, but it’s in a very narrative and topical form, and all you can do is link to a top-level topic (page), not a specific claim. There’s also a prohibition against original research.

On the other hand, you have Wikidata, which is very intriguing project to build a semantically structured database of the world’s knowledge. In Wikidata, everything is an unambiguous concept with its proper unique identifier. So “Oslo (the city)” has it’s own ID, but the concept of a city also has an ID. So you can say that Oslo (Q585) is connected to the concept city (Q515) through the property is an instance of (P31), and ideally that’s an unambiguous statement.

You can even qualify it by saying that this has only been true since a certain year, and you can provide evidence for this particular relationship, or even specify that it is contested (and provide evidence for both conditions - being a city and not being a city). However, the evidence is limited to citing sources, and not building up an argumentation graph.

In practice, I did not manage to find many interesting examples of Wikidata articles with contested facts that were well sourced. Here’s an example of Chelsea Manning’s page, where the fact of her name has changed over time.

Mapping arguments and evidence

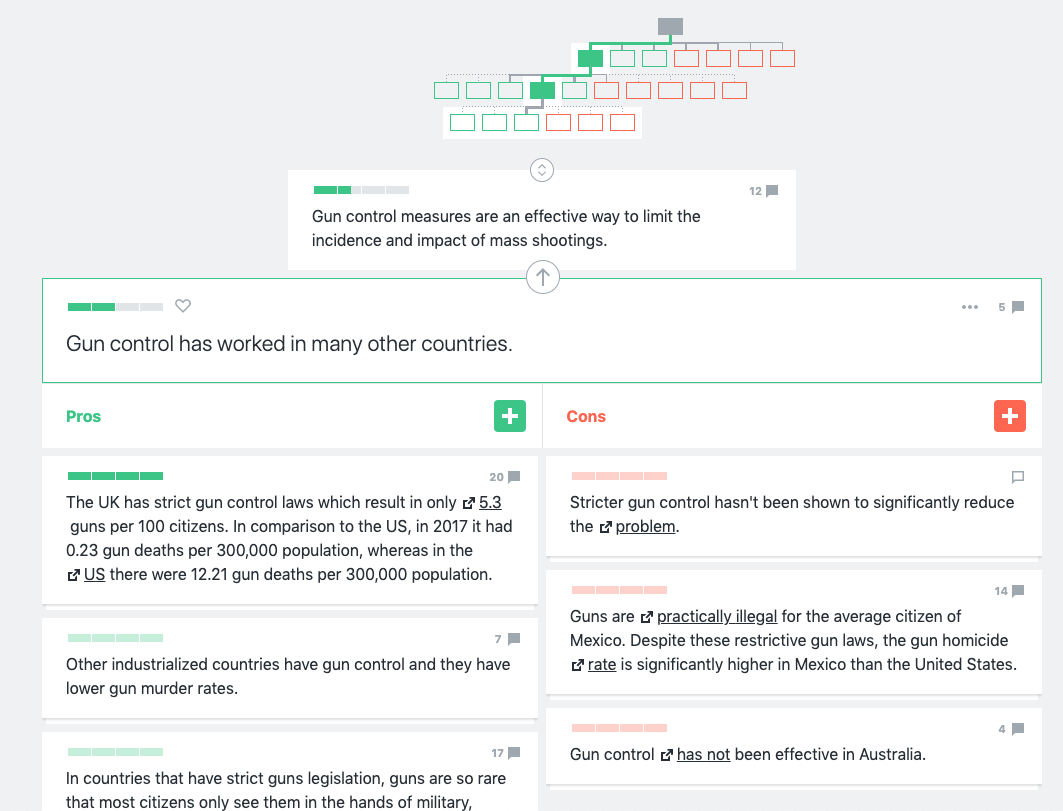

So Wikidata offers semantic facts (and in future newsletters we’ll see that this can be very powerful), but does not let you build a chain of evidence. A tool that aims to do the latter is Kialo, a platform that hosts more than 11,000 “debates”. The debate structure seems a bit similar to the Argument Mapping we talked about in issue 1, but arguments are presented as a tree. You start with a position, supply arguments for or against, provide evidence for or against those arguments, and so on - turtles all the way down.

A problem with this tool is that it does not distinguish between facts and opinions (or values), and there is no way of distinguishing sources (other than mentioning them in the comments). The simple Pros and Cons interface also lumps together “you linked to a refuted scientific article”, with “your logical assumptions are faulty” and “I don’t agree that a human life is infinitely valuable”.

However, unless they are all bots, the number of users seems to indicate that people find this useful - but as a way of understanding issues more deeply, or more as a stage to try to convince others of your own political convictions?

Coronavirus: a new urgency

The rapid spread of the Coronavirus has led to a huge interest in understanding the news and science, in a situation that is far more complex than figuring out whether a presidential candidate correctly critiqued an economic policy. Because of its global nature, data is coming in from all around the world, with people often linking to newspaper sources or retweeting things that are very difficult to verify. The news changes very quickly, and much of the news consists of predictions and assumptions. There is a combination of epidemiological data (how many have tested positive at a certain location) with government policy (are train stations in Italy closed) to local citizen conditions, to understanding the science and research around the virus.

In addition to all of the existing fact checking and media organizations, there have been some novel initiatives, some aiming at speeding up the process of sharing research, such as pre-prints, or the OpenKnowledgeMap about Coronavirus related research. Science Mag writes about how the Coronavirus changes how researchers communicate.

ESRI have written about responsible geographical mapping of the virus, Worldometers is providing live up-to-date counts of infected around the world, and Conor White-Sullivan has started a public Roam instance to map knowledge about the virus.

Please share any other innovative approaches to fact checking or reusable facts, related to Coronavirus or in general.

Roam community news

Khe Hy has recorded a great video, talking about creativity and serendipity, and Roam as a “magical junk yard”. Venkatesh Rao has written A Text Renaissance - great essay about Twitter storms, Substack, Roam and more.

Other videos:

“How to journal and improve your thinking with Roam Research” by Roman Rey

“Roam: Creating a Resonance Calendar in Roam Research” by Shu Omi

“Study with Me: Taking Reading Notes in Roam Research” by Shu Omi

“Roam Research - Conor White-Sullivan (Antipessimist)” (1:45 hr discussion with Roam founder)

Other links:

RoamBrain.com - new Roam unofficial community site by Francis Miller

Roam plugin for Anki (automatically create cloze questions by using {word to hide} in Roam)

This is a great review of the state of fact-checking! The Wikidata, Kialo, and Google Fact-Checking tools are encouraging signs.

Concerning reusable facts, factual building blocks, and using them to construct argument chains and bodies of knowledge as discussed in your previous post, I am very keen on "evees," which are just such blocks. https://www.uprtcl.io/

Bi-directional links will connect claims, citations, evidence, and fact-checking (all evees) across platforms. Updated information will flow downstream and fork into new branches. Ultimately, statements will evolve in the marketplace of ideas. For journalists and researchers who care about the truth, it will be much easier to discover.

But most people do NOT base our strongest beliefs on logic or evidence, and are even less likely to change them on that basis.

“We don’t have a misinformation problem, we have a trust problem.” (regarding vaccine hesitancy): https://www.nytimes.com/2020/10/13/health/coronavirus-vaccine-hesitancy-larson.html - Rumors evolve. More had to be done to engage people with doubts, and not merely dismiss them. “People don’t care about what you know, unless they know that you care.”

People formulate beliefs based not on evidence but on webs of trust. Evees will be used to construct webs of misinformation as well as facts. It will be interesting to see how they compete with each other. How will each garner trust?